Power Capacity and the Future of AI

As artificial intelligence evolves into a full-scale industrial ecosystem, it is also consuming an unprecedented amount of electricity. Whether they are generative models, large language models (LLMs), or multimodal agents, AI systems run in data centers that rely on a common physical foundation: power capacity.

Behind the rapid progress of AI, energy consumption has become a growing concern. Some have noted that “the endgame of AI is compute, and the endgame of compute is power.” As models expand and real-time inference becomes the norm, the industry faces a new and pressing question: Do we have enough electricity to power it all?

The Exponential Rise in AI Power Demand

According to the International Energy Agency (IEA), global data center electricity consumption is expected to double by 2030, reaching around 945 terawatt-hours (TWh) annually. Within that total, AI-optimized high-performance data centers may consume up to four times more electricity than they do today, equivalent to adding the annual power consumption of a medium-sized developed country to the global grid.
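A doubling over a fixed window implies a steady compound growth rate, which is easier to compare with grid build-out plans. The sketch below assumes a 2024 baseline year, which the text does not state. The function name `implied_cagr` is hypothetical.

```python
def implied_cagr(growth_multiple: float, years: int) -> float:
    """Compound annual growth rate that yields growth_multiple over `years` years."""
    return growth_multiple ** (1 / years) - 1


TARGET_TWH = 945.0
BASE_YEAR, TARGET_YEAR = 2024, 2030  # baseline year is an assumption, not from the text

# "Double by 2030" implies the baseline is about half of the 2030 figure.
base_twh = TARGET_TWH / 2
rate = implied_cagr(2.0, TARGET_YEAR - BASE_YEAR)
print(f"implied baseline ~{base_twh:.1f} TWh, growth ~{rate:.1%} per year")
```

Under this assumption, doubling in six years works out to roughly 12% growth per year. A later baseline year would imply a faster rate.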

Traditional data centers typically draw between 10 and 25 megawatts (MW) of power, while hyperscale AI-focused facilities can exceed 100 MW, with annual energy use comparable to that of 100,000 households. These AI-centric facilities continue to expand rapidly to accommodate ever-larger models and rising demand for AI services.
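The household comparison comes down to simple arithmetic: average power multiplied by the hours in a year. The sketch below assumes a facility drawing a constant 100 MW around the clock, although real utilization is lower. It then derives the per-household figure implied by the 100,000-household comparison. The function names are hypothetical.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours (leap years ignored)


def annual_energy_gwh(avg_draw_mw: float, utilization: float = 1.0) -> float:
    """Energy used over one year, in GWh, for a given average power draw in MW."""
    # MW x hours = MWh; divide by 1,000 to convert MWh to GWh.
    return avg_draw_mw * utilization * HOURS_PER_YEAR / 1000


def implied_household_mwh(annual_gwh: float, households: int) -> float:
    """Annual MWh per household implied by spreading annual_gwh across households."""
    return annual_gwh * 1000 / households


facility_gwh = annual_energy_gwh(100)  # hypothetical 100 MW facility at full load
per_home = implied_household_mwh(facility_gwh, 100_000)
print(f"{facility_gwh:.0f} GWh/year, about {per_home:.2f} MWh per household")
```

At full load this gives about 876 GWh per year, or roughly 8.76 MWh per household. Lower utilization scales both figures down proportionally.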

Training GPT-3 reportedly consumed roughly 1.3 gigawatt-hours (GWh) of electricity. By one estimate, generating a medium-length response of around 1,000 tokens with GPT-5 consumes an average of 18.35 watt-hours (Wh), about as much as running a microwave oven for 76 seconds, and can reach as much as 40 Wh. That average alone is roughly 8.7 times the 2.12 Wh estimated for GPT-4. Every new generation of AI models, especially those with hundreds of billions of parameters, requires longer training cycles, denser GPU clusters, and sustained high power draw.
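The comparisons in this paragraph can be checked directly from the quoted figures. The microwave's power rating is not stated. This sketch therefore works backward to the rating implied by the 76-second equivalence. It also shows that the roughly 8.7x ratio applies to the average figure, not to the 40 Wh peak.

```python
GPT4_WH = 2.12        # per-response figure quoted for GPT-4
GPT5_AVG_WH = 18.35   # average per-response figure quoted for GPT-5
GPT5_PEAK_WH = 40.0   # upper figure quoted for GPT-5
MICROWAVE_SECONDS = 76


def implied_power_w(energy_wh: float, seconds: float) -> float:
    """Power (W) that would use energy_wh in the given number of seconds."""
    return energy_wh * 3600 / seconds  # 1 Wh = 3,600 J, and W = J/s


avg_ratio = GPT5_AVG_WH / GPT4_WH    # about 8.66
peak_ratio = GPT5_PEAK_WH / GPT4_WH  # about 18.87
microwave_w = implied_power_w(GPT5_AVG_WH, MICROWAVE_SECONDS)

print(f"average: {avg_ratio:.2f}x GPT-4, peak: {peak_ratio:.2f}x GPT-4")
print(f"implied microwave rating: {microwave_w:.0f} W")
```

The implied rating of about 869 W is within the range of common household microwave ovens, so the comparison holds up.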

In short, electricity has become the new currency of computation.

How Power Capacity Shapes AI Development

“Speed-to-Power” has emerged as a key metric of competitiveness. In the global race for AI infrastructure, the speed at which a data center can secure and activate a stable power supply often determines how fast AI capacity can be deployed. Even with sufficient land, cooling, and connectivity, projects can stall if grid access is delayed.

The expansion of electricity generation and transmission infrastructure remains slow. From planning and permitting to construction and operation, the process can take years, while AI demand grows on a quarterly cycle. Utilities must balance the needs of data centers with stable residential and industrial supply. The United States, home to the world’s largest concentration of AI data centers, is already nearing grid stability thresholds in several regions.

AI training workloads can trigger sudden and intense power surges that stress local grids. When massive GPU clusters ramp up simultaneously, they can cause voltage fluctuations, particularly on grids with a large share of variable renewable energy. These effects show that AI-related power infrastructure still needs significant improvement.
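Synchronized ramps matter because the swings add up across the whole cluster. Consider a simplified model of synchronous training in which every GPU switches between a compute phase and a lower-power communication phase at the same moment. The GPU count and per-GPU wattages below are hypothetical placeholders. The model also ignores CPUs, networking, and cooling.

```python
PER_GPU_W = {"compute": 700, "comm": 250}  # hypothetical per-GPU draw by phase


def cluster_trace_mw(num_gpus: int, phases: list[str]) -> list[float]:
    """Aggregate GPU draw (MW) when all GPUs share the same phase sequence."""
    return [num_gpus * PER_GPU_W[phase] / 1e6 for phase in phases]


def max_step_mw(trace: list[float]) -> float:
    """Largest change in load between two consecutive samples."""
    return max(abs(b - a) for a, b in zip(trace, trace[1:]))


phases = ["compute", "comm", "compute", "comm", "compute"]
trace = cluster_trace_mw(20_000, phases)
print(trace)               # [14.0, 5.0, 14.0, 5.0, 14.0]
print(max_step_mw(trace))  # 9.0
```

In this model, a 20,000-GPU cluster shifts its load by 9 MW in a single step, and it does so repeatedly. That repeating square-wave pattern is what makes synchronized training harder on a grid than a steady load of the same average size.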

Bridging the AI Power Gap

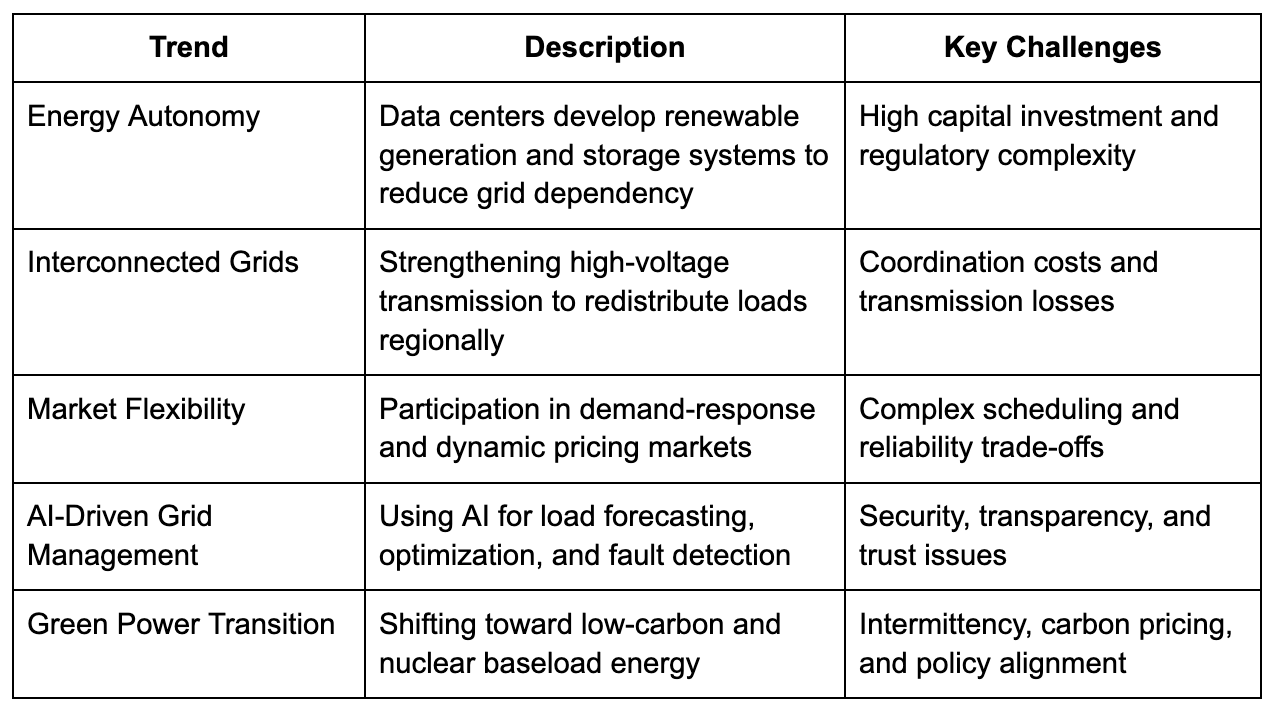

The accelerating power consumption of AI has become a central challenge for enterprises. As electricity availability becomes a defining factor in AI development, many technology companies are improving internal efficiency and diversifying their energy strategies to strengthen resilience and reduce their reliance on external grid expansion or policy incentives.

The first priority is energy efficiency and computational optimization. Through model compression, sparse computation, quantization, and dynamic scheduling, AI developers are striving to extract more compute per watt. Data center architectures are also evolving. Liquid cooling and high-density rack designs are driving continuous improvements in PUE (Power Usage Effectiveness), the ratio of total facility energy to the energy delivered to IT equipment, where values closer to 1.0 mean less overhead.
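PUE is a simple ratio: total facility energy divided by the energy delivered to IT equipment. The sketch below uses hypothetical numbers for a facility before and after a cooling upgrade, with the IT load held fixed. It shows how cutting overhead moves PUE toward 1.0.

```python
def pue(it_mwh: float, cooling_mwh: float, other_overhead_mwh: float = 0.0) -> float:
    """Power Usage Effectiveness = total facility energy / IT equipment energy."""
    if it_mwh <= 0:
        raise ValueError("IT energy must be positive")
    total = it_mwh + cooling_mwh + other_overhead_mwh
    return total / it_mwh


# Hypothetical monthly figures (MWh): same IT load, different cooling overhead.
IT_MWH = 100
before = pue(IT_MWH, cooling_mwh=35, other_overhead_mwh=5)  # 1.40
after = pue(IT_MWH, cooling_mwh=10, other_overhead_mwh=5)   # 1.15
saved_mwh = (before - after) * IT_MWH  # facility energy saved for the same IT work
print(f"PUE {before:.2f} -> {after:.2f}, saving about {saved_mwh:.0f} MWh")
```

Because the IT load is unchanged, every drop in PUE translates directly into facility energy that no longer has to be bought from the grid.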

The second approach is energy diversification and storage development. Many AI infrastructure operators are deploying distributed generation, such as campus-scale solar and wind, alongside on-site battery energy storage systems (BESS) to balance peak loads and increase energy flexibility.
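One common way storage balances peak loads is peak shaving. The battery discharges when site load exceeds a grid-draw limit and recharges when there is headroom below it. This is a minimal greedy sketch with several assumptions:

- hourly time steps
- a lossless battery, with no round-trip efficiency losses
- hypothetical load and battery figures

Production dispatch systems are considerably more sophisticated.

```python
from dataclasses import dataclass


@dataclass
class Battery:
    capacity_mwh: float   # usable stored energy
    max_power_mw: float   # charge/discharge rate limit
    soc_mwh: float = 0.0  # current state of charge


def peak_shave(load_mw, grid_cap_mw, battery, step_h=1.0):
    """Greedy dispatch: return the grid draw (MW) for each time step.

    Assumes a lossless battery and constant load within each step.
    """
    grid = []
    for load in load_mw:
        if load > grid_cap_mw:
            # Cover the excess from storage, limited by rate and stored energy.
            discharge = min(load - grid_cap_mw, battery.max_power_mw,
                            battery.soc_mwh / step_h)
            battery.soc_mwh -= discharge * step_h
            grid.append(load - discharge)
        else:
            # Recharge using headroom under the cap, limited by rate and free capacity.
            charge = min(grid_cap_mw - load, battery.max_power_mw,
                         (battery.capacity_mwh - battery.soc_mwh) / step_h)
            battery.soc_mwh += charge * step_h
            grid.append(load + charge)
    return grid


site_load = [80.0, 90.0, 120.0, 130.0, 95.0, 70.0]  # hypothetical hourly load, MW
bess = Battery(capacity_mwh=40.0, max_power_mw=30.0)
print(peak_shave(site_load, grid_cap_mw=100.0, battery=bess))
# [100.0, 100.0, 100.0, 120.0, 100.0, 100.0]
```

In this run, the 130 MW peak is cut to 120 MW. The battery empties during the fourth hour, which is why that hour still exceeds the cap. A larger battery would be needed to hold the grid draw flat.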

In addition, intelligent power management systems are becoming standard. AI-driven energy monitoring platforms can track real-time power fluctuations across GPU clusters, predict peaks, and automatically adjust supply strategies, optimizing compute scheduling and energy efficiency together.
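As a rough illustration of the predict-then-act loop, the sketch below does two things. First, it forecasts the next power reading with double exponential smoothing (Holt's linear method). This is a simple statistical stand-in for an AI-driven predictor, not a description of any specific platform. Second, it maps the forecast to an action. The smoothing parameters, the 95% margin, and the action names are all hypothetical.

```python
def holt_forecast(readings, alpha=0.5, beta=0.3):
    """One-step-ahead forecast via double exponential smoothing (level + trend)."""
    level, trend = readings[0], 0.0
    for x in readings[1:]:
        prev_level = level
        level = alpha * x + (1 - alpha) * (level + trend)
        trend = beta * (level - prev_level) + (1 - beta) * trend
    return level + trend


def plan_action(readings_mw, limit_mw, margin=0.95):
    """Map the forecast to a hypothetical supply or scheduling action."""
    forecast = holt_forecast(readings_mw)
    if forecast >= limit_mw:
        return "dispatch_storage", forecast
    if forecast >= margin * limit_mw:
        return "throttle_flexible_jobs", forecast
    return "normal", forecast


print(plan_action([50.0] * 6, limit_mw=100.0))  # ('normal', 50.0)
print(plan_action([80.0, 85.0, 90.0, 95.0, 100.0], limit_mw=100.0)[0])  # dispatch_storage
```

The trend term is what lets the loop act before the limit is actually crossed. A steadily rising load triggers storage dispatch even though the latest reading is only at the limit.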

Finally, enterprise collaboration is emerging as a key enabler. Leading AI companies and energy providers are co-investing in next-generation power infrastructure, signing long-term power purchase agreements (PPAs), and accelerating the integration of green electricity and regional energy interconnections.

Overall, the industry is shifting from simply scaling compute to using energy more intelligently, pursuing higher efficiency and stability in a world of finite electricity.

From Resource to Strategic Asset

Electricity capacity is no longer just a technical metric. It has become a strategic lever connecting digital innovation, industrial strategy, and the global energy transition.

In the coming decade, competition in AI will revolve not only around models or chips but also around who can secure reliable, efficient, and sustainable power the fastest.

Conclusion

As global AI demand accelerates, power capacity has shifted from a constraint to a strategic advantage. The next breakthroughs in AI will depend not only on better semiconductors and smarter algorithms but also on who can deliver scalable, clean, and resilient power infrastructure.

At Bitdeer AI, we are expanding our dedicated AI power capacity to 200 MW of IT load by 2026 as part of our long-term strategy to ensure sustainable, high-performance infrastructure across our global data center network. We believe that in the era of intelligence, every watt of energy fuels innovation.