Demystifying GPUs for AI Beginners: What You Need to Know

Artificial Intelligence (AI) is revolutionizing industries from finance to healthcare. But behind every high-performing AI system is a hardware powerhouse: the GPU (Graphics Processing Unit). For newcomers to AI, understanding how GPUs work and why they matter is crucial to building efficient, scalable AI applications. In this guide, we break down the essentials of GPUs for AI beginners, going beyond surface-level definitions to give you a practical, foundational understanding.

What Is a GPU?

A GPU is a specialized processor designed for handling multiple operations in parallel. Originally built to render graphics in video games and simulations, GPUs are now the default engines for training and deploying deep learning models. Their architecture is uniquely suited for the mathematical workloads in AI, particularly matrix multiplications and linear algebra.

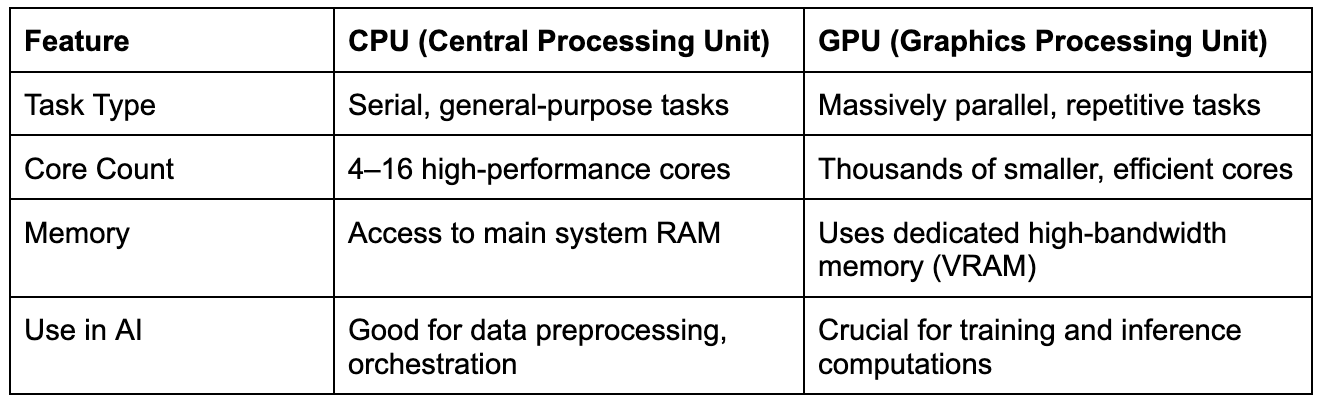

CPU vs GPU: Not a Competition, But a Complement

In AI, GPUs are often paired with CPUs: the CPU coordinates tasks, while the GPU does the heavy mathematical lifting.

Core Concepts of GPUs

If you're aiming to understand or eventually select a GPU for AI use, you'll need to understand the following concepts:

1. CUDA and Tensor Cores

- CUDA Cores: The smallest units of computation in an NVIDIA GPU. Designed for highly parallel workloads like element-wise operations or vector addition.

- Tensor Cores: Introduced with NVIDIA's Volta architecture, these specialize in matrix multiplications at reduced and mixed precision, drastically accelerating AI workloads. Volta supported FP16; later generations added BF16 (Ampere) and FP8 (Hopper).
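To make the precision trade-off concrete, here is a minimal sketch that uses only Python's standard library. It does not touch a GPU or Tensor Cores; it only illustrates the FP16 number format itself, showing that a half-precision value takes half the storage of FP32 and carries fewer significant digits. The helper name `to_fp16` is an assumption made for this example. BF16 and FP8 are not covered because the `struct` module has no format codes for them.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision (FP16) value."""
    # The "e" format code packs a float into 2 bytes as binary16.
    return struct.unpack("<e", struct.pack("<e", x))[0]


# Storage cost per value, in bytes.
BYTES_FP32 = struct.calcsize("<f")
BYTES_FP16 = struct.calcsize("<e")

# FP16 keeps only 10 mantissa bits, so many values are rounded.
fp16_tenth = to_fp16(0.1)     # 0.1 is not exactly representable
fp16_2049 = to_fp16(2049.0)   # above 2048, FP16 can only step in units of 2

print(f"bytes per value: FP32={BYTES_FP32}, FP16={BYTES_FP16}")
print(f"0.1 stored as FP16  -> {fp16_tenth}")
print(f"2049 stored as FP16 -> {fp16_2049}")
```

This loss of precision is why mixed-precision training typically keeps some quantities in FP32 while doing the bulk of the matrix math in a 16-bit format.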

2. VRAM and Memory Bandwidth

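- VRAM: Dedicated memory on the GPU, used to store model weights, inputs, activations, and gradients. Requirements grow with parameter count and precision: at 2 bytes per parameter (FP16), a 70-billion-parameter model such as Llama 3 70B needs about 140 GB for its weights alone, so large models often call for GPUs with 40 GB+ of VRAM, or several of them.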

- Memory Bandwidth: Refers to how fast data can be moved between memory and the GPU cores. Bottlenecks here limit model throughput.
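A rough back-of-the-envelope calculation helps when judging whether a model will fit in VRAM. The sketch below is an estimate under stated assumptions, not a precise sizing tool: the function names are invented for this example, the 8B and 70B parameter counts are illustrative, and the 16-bytes-per-parameter training figure is a common rule of thumb for mixed-precision training with the Adam optimizer (FP16 weights and gradients plus FP32 master weights and two FP32 optimizer states). Activations, KV caches, and framework overhead are deliberately left out, so real usage will be higher.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "fp8": 1}

# Rule-of-thumb assumption for mixed-precision Adam training:
# fp16 weights (2) + fp16 gradients (2) + fp32 master weights (4)
# + Adam first moment (4) + Adam second moment (4) = 16 bytes per parameter.
TRAINING_BYTES_PER_PARAM = 16


def weights_gb(num_params: int, dtype: str = "fp16") -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def training_gb(num_params: int) -> float:
    """Weights + gradients + optimizer state, excluding activations, in GB."""
    return num_params * TRAINING_BYTES_PER_PARAM / 1e9


for name, params in [("8B model", 8_000_000_000), ("70B model", 70_000_000_000)]:
    print(
        f"{name}: inference weights fp16 = {weights_gb(params):.0f} GB, "
        f"fp8 = {weights_gb(params, 'fp8'):.0f} GB, "
        f"training state = {training_gb(params):.0f} GB"
    )
```

The gap between the inference and training figures is one reason a model that runs comfortably on a single GPU can still need a multi-GPU setup to train.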

3. FLOPs and Throughput

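- FLOPs and FLOPS: FLOPs (floating-point operations) count how much work a computation requires; FLOPS (floating-point operations per second) measure how many of those operations a GPU can perform each second. Spec sheets usually quote peak throughput in TFLOPS or PFLOPS.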

- Inference vs Training: Training is compute-intensive (e.g., the total compute to train a model on the scale of GPT-4o is estimated to run well beyond hundreds of exaFLOPs); inference focuses more on latency and efficiency.
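The interplay between compute throughput and memory bandwidth can be sketched with a simple roofline-style estimate. The example below counts the FLOPs and bytes moved for a matrix multiplication and checks which limit dominates. Everything numeric here is an assumption for illustration: the GPU is hypothetical (100 TFLOPS peak, 1 TB/s bandwidth), the function names are made up for this sketch, and the model ignores caches, kernel launch overhead, and the fact that real hardware rarely reaches its peak figures.

```python
def matmul_flops(m: int, k: int, n: int) -> int:
    """FLOPs for an (m x k) @ (k x n) product: one multiply and one add per term."""
    return 2 * m * k * n


def matmul_bytes(m: int, k: int, n: int, bytes_per_elem: int = 2) -> int:
    """Bytes to read both inputs and write the output once (FP16 by default)."""
    return (m * k + k * n + m * n) * bytes_per_elem


def estimate(flops: int, bytes_moved: int, peak_flops: float, peak_bandwidth: float):
    """Return (seconds, limiting resource) under a simple roofline model."""
    compute_time = flops / peak_flops
    memory_time = bytes_moved / peak_bandwidth
    bound = "compute" if compute_time >= memory_time else "memory"
    return max(compute_time, memory_time), bound


# Hypothetical GPU, not a real product's spec sheet.
PEAK_FLOPS = 100e12      # 100 TFLOPS
PEAK_BANDWIDTH = 1e12    # 1 TB/s

# Large square matmul, typical of training with big batches.
big = estimate(matmul_flops(4096, 4096, 4096), matmul_bytes(4096, 4096, 4096),
               PEAK_FLOPS, PEAK_BANDWIDTH)

# Matrix-vector product, typical of batch-size-1 inference.
gemv = estimate(matmul_flops(1, 4096, 4096), matmul_bytes(1, 4096, 4096),
                PEAK_FLOPS, PEAK_BANDWIDTH)

print(f"4096^3 matmul: {big[0] * 1e3:.3f} ms, {big[1]}-bound")
print(f"1x4096 @ 4096x4096: {gemv[0] * 1e6:.1f} us, {gemv[1]}-bound")
```

Under these assumptions the large matmul is limited by compute, while the batch-size-1 case is limited by how fast weights can be read from memory, which is why bandwidth matters so much for low-latency inference.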

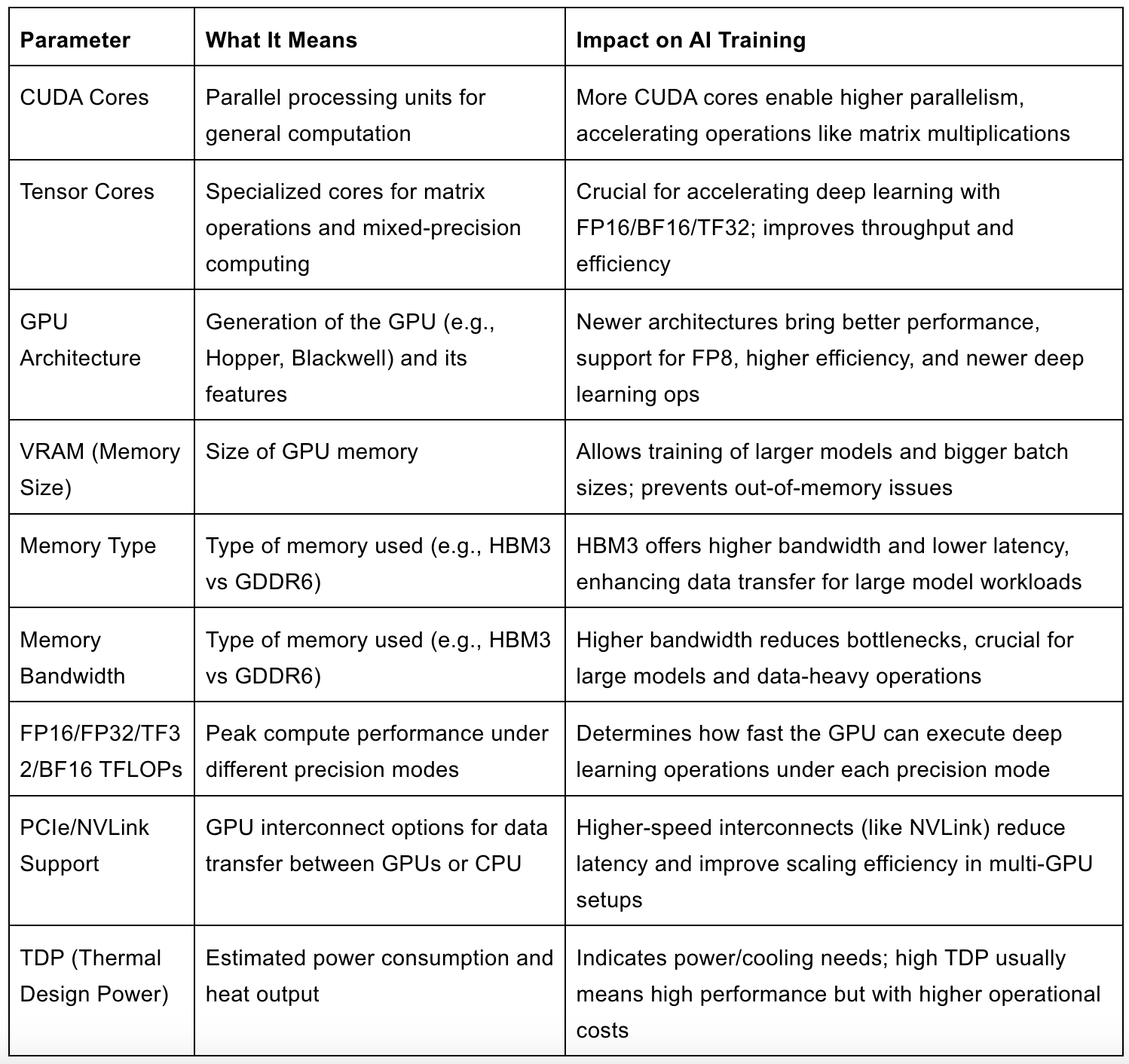

Common GPU Specs You Should Know

When evaluating GPUs for AI use, here are some of the most important specifications to look for:

Understanding these specs will help you match the right GPU to your AI workload, whether it's training LLMs, fine-tuning a vision model, or running edge inference.

The Role of GPUs Across the AI Lifecycle

Training Phase

This is where raw computational power matters most. Training large-scale models involves optimizing millions or even billions of weights over huge datasets.

- Single accelerators: NVIDIA A100, H100, or the GB200 superchip (which pairs a Grace CPU with two Blackwell GPUs)

- Multi-GPU clusters connected with NVLink, InfiniBand, or PCIe

Inference Phase

Inference is about speed, energy efficiency, and cost-effectiveness. Here, smaller or quantized models are often used to make predictions in real time.

- Edge inference: NVIDIA Jetson Nano, Jetson Orin

- Cloud inference: T4, L4, A10G, H100

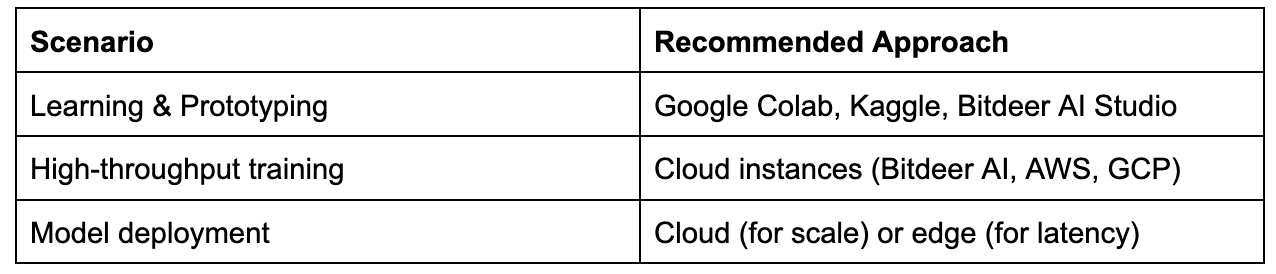

Cloud vs Local GPUs: Which Should You Use?

Bitdeer AI offers containerized access to high-end GPUs like the GB200 NVL72, H200, or H100 via a scalable interface, ideal for both experimentation and production workloads.

Practical GPU Tips for Beginners

- Start with cloud platforms: You don't need to buy a GPU upfront. Use Google Colab or the Bitdeer AI Cloud platform.

- Profile performance: Use nvidia-smi, Nsight Systems, or PyTorch Profiler to monitor memory and compute utilization.

- Optimize batch size: It’s a balancing act. Too large and you’ll run out of VRAM; too small and you waste performance.

- Use mixed precision: Accelerate training and reduce memory use with FP16 or BF16.

- Watch for memory bottlenecks: A powerful GPU with low memory bandwidth can still slow down training.
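As a starting point for the profiling tip, here is a minimal sketch that reads memory and utilization figures from `nvidia-smi` using its CSV query mode (`--query-gpu` with `--format=csv,noheader,nounits`). The function names and the dictionary keys are choices made for this example, and the sample text is made-up data standing in for real output. On a machine without an NVIDIA driver, `query_gpu_stats` simply returns an empty list.

```python
import shutil
import subprocess

# Fields requested from nvidia-smi, in this order.
QUERY_FIELDS = "memory.used,memory.total,utilization.gpu"


def _to_int(text: str):
    """Convert a CSV field to int, or None if the GPU reports it as unavailable."""
    try:
        return int(text.strip())
    except ValueError:
        return None


def parse_gpu_stats(csv_text: str) -> list:
    """Parse 'used, total, util' lines (MiB, MiB, percent), one line per GPU."""
    stats = []
    for line in csv_text.strip().splitlines():
        fields = line.split(",")
        if len(fields) != 3:
            continue  # skip blank or unexpected lines
        used, total, util = (_to_int(f) for f in fields)
        memory_pct = round(100 * used / total, 1) if used is not None and total else None
        stats.append({
            "memory_used_mib": used,
            "memory_total_mib": total,
            "memory_pct": memory_pct,
            "utilization_pct": util,
        })
    return stats


def query_gpu_stats() -> list:
    """Run nvidia-smi if it is installed; return [] when it is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=False,
    )
    return parse_gpu_stats(result.stdout) if result.returncode == 0 else []


# Made-up sample output for two GPUs, used so the sketch runs anywhere.
SAMPLE = "4096, 16384, 37\n8192, 16384, 95\n"
sample_stats = parse_gpu_stats(SAMPLE)
for index, gpu in enumerate(sample_stats):
    print(f"GPU {index}: {gpu['memory_pct']}% memory, {gpu['utilization_pct']}% busy")
```

Calling `query_gpu_stats()` periodically during training gives a quick signal: memory close to 100% suggests the batch size is near its limit, while low utilization with plenty of free memory suggests there is room to increase it.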

Seamless AI Development with Bitdeer AI GPU Cloud

Bitdeer AI's cloud offers a high-performance, serverless GPU platform that supports the entire AI lifecycle, from model training to inference. Developers can access cutting-edge NVIDIA GPUs like the H100 and GB200 on demand, without managing physical infrastructure. With built-in support for distributed training, elastic inference services, and optimized environments for TensorFlow and PyTorch, Bitdeer makes it easy to scale AI workloads efficiently and cost-effectively.

Whether you're training large language models or deploying real-time inference APIs, Bitdeer's unified platform accelerates development while reducing complexity, making enterprise-grade AI more accessible than ever.

Final Thoughts

Understanding GPUs is a foundational step in becoming proficient in AI development. From model training to real-time inference, GPUs determine the feasibility, cost, and performance of your solutions. As AI continues to evolve, so too will GPU architectures, and the earlier you get familiar with them, the better prepared you'll be to build powerful, scalable AI systems.

Whether you're training your first neural network or scaling a production pipeline, keep learning, profiling, and experimenting. The GPU is more than hardware; it's your AI accelerator.