Deploying Foundation Models with a Click: Exploring NVIDIA NIMs on Bitdeer AI

As artificial intelligence transitions from prompt-based tools to autonomous systems, scalable and modular infrastructure becomes essential. NVIDIA Inference Microservices (NIMs) address this challenge by letting developers and enterprises deploy powerful open-source foundation models quickly and reliably.

Bitdeer AI is an officially licensed NVIDIA partner for NIMs, so users can access a curated collection of NVIDIA NIM containers directly through the Bitdeer AI platform. These containers support industry-leading models such as gpt-oss-120b, Llama 4, and Mixtral, as well as embedding models for retrieval. Deployment takes only a few clicks in the Bitdeer AI Studio interface.

What Are NVIDIA NIMs?

NVIDIA NIMs are containerized inference microservices that package pretrained AI models, their dependencies, and optimized runtimes into a single deployable unit. Part of the NVIDIA AI Enterprise suite, these prebuilt Docker containers are designed for efficient inference on GPU infrastructure. Each NIM exposes industry-standard APIs for integration into AI applications and development workflows, and is tuned for latency and throughput on its specific combination of foundation model and GPU.

With NIMs, developers avoid setting up runtime environments, managing model dependencies, or configuring inference servers by hand. Each container is production-ready and optimized with NVIDIA inference technologies such as TensorRT and Triton Inference Server, enabling low-latency, high-throughput serving of large language models, vision-language models, and embedding models.

Source: NVIDIA
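To make "industry-standard APIs" concrete: LLM NIMs serve an OpenAI-compatible HTTP API, so a client needs nothing beyond an HTTP library. The sketch below uses only the Python standard library. The base URL is a placeholder (NIM containers commonly listen on port 8000 when run locally; a Bitdeer AI deployment will have its own endpoint), and the helper names are hypothetical, not part of any NVIDIA or Bitdeer SDK.

```python
import json
import urllib.request

# Placeholder endpoint (assumption): replace with the URL of your own deployment.
BASE_URL = "http://localhost:8000"


def build_chat_request(model: str, prompt: str, max_tokens: int = 256) -> dict:
    """Build an OpenAI-style chat completions payload with one user message."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def post_json(url: str, payload: dict, timeout: float = 60.0) -> dict:
    """POST a JSON payload and decode the JSON response body."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)


def chat(base_url: str, payload: dict) -> dict:
    """Send a chat request to the OpenAI-compatible /v1/chat/completions route."""
    return post_json(f"{base_url}/v1/chat/completions", payload)


def extract_reply(body: dict) -> str:
    """Pull the generated text out of an OpenAI-style chat completion response."""
    return body["choices"][0]["message"]["content"]
```

For example, `extract_reply(chat(BASE_URL, build_chat_request("meta/llama-3.1-8b-instruct", "What is a NIM?")))` would return the model's answer, assuming a Llama 3.1 8B NIM is reachable at `BASE_URL`. Because the API follows the OpenAI schema, the official `openai` Python client pointed at the same base URL should work as well.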

Deployment Use Cases

NVIDIA NIMs unlock a wide range of enterprise-grade applications across text, vision, and multimodal AI workloads:

- Enterprise Chat Assistants: Deploy instruction-tuned Llama-based models for internal knowledge retrieval, IT helpdesk automation, and customer support, improving response accuracy and reducing support costs.

- Vision-Language Understanding: Use models like mistral-small-3.2-24b for tasks such as image captioning, visual question answering, product tagging, and multimodal search, capabilities that are crucial for e-commerce, media, and digital asset management.

- Content Moderation and Document Pipelines: Build scalable pipelines for real-time content moderation, document classification, translation, and batch summarization in industries like media, social platforms, and global enterprise communications.

- Multimodal Copilots for Vertical Domains: Develop domain-specific AI copilots that process and reason over multiple data types (text, images, and tabular data) for use in finance (e.g., earnings analysis), healthcare (e.g., clinical decision support), and retail (e.g., inventory and customer insights).
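For the vision-language use case, a request has to carry an image alongside the question. Here is a minimal sketch, assuming the vision-language NIM accepts OpenAI-style `image_url` content parts with base64 `data:` URLs; supported image formats and the exact served model name vary by model, so check the documentation for the specific NIM. The model name is deliberately left as a parameter, and the function names are hypothetical.

```python
import base64


def build_vqa_message(question: str, image_bytes: bytes, mime: str = "image/png") -> dict:
    """Build one OpenAI-style user message combining a question and an inline image."""
    # Encode the raw image bytes as a base64 data URL (assumed input format).
    data_url = f"data:{mime};base64," + base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image_url", "image_url": {"url": data_url}},
        ],
    }


def build_vqa_request(model: str, question: str, image_bytes: bytes,
                      mime: str = "image/png", max_tokens: int = 128) -> dict:
    """Wrap the multimodal message in a chat completions payload."""
    return {
        "model": model,
        "messages": [build_vqa_message(question, image_bytes, mime)],
        "max_tokens": max_tokens,
    }
```

The resulting payload is posted to `/v1/chat/completions` just like a text-only request; the only difference is that the message content becomes a list of parts instead of a plain string.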

How to Deploy NVIDIA NIMs on Bitdeer AI

As an authorized NVIDIA NIM provider, Bitdeer AI has integrated a growing library of NVIDIA NIMs directly into the Container Registry service in our AI Studio. Users can search for models, preview configurations, and launch GPU-accelerated inference pods in seconds. There is no need to build containers, manage clusters, configure Kubernetes, or provision GPUs manually. Just deploy and go.

Supported Models on Bitdeer AI

Bitdeer AI supports a variety of NIMs for tasks including text generation, multimodal understanding, document summarization, vector embedding, image-text alignment, and retrieval-augmented generation. Here are some examples:

- openai/gpt-oss-120b

- meta/llama-3.1-8b-instruct

- meta/llama-3.3-70b-instruct

- mistralai/mistral-7b-instruct-v0.3

- nvidia/nv-embedqa-e5-v5-pb25h1

- nvidia/nv-rerankqa-mistral-4b-v3

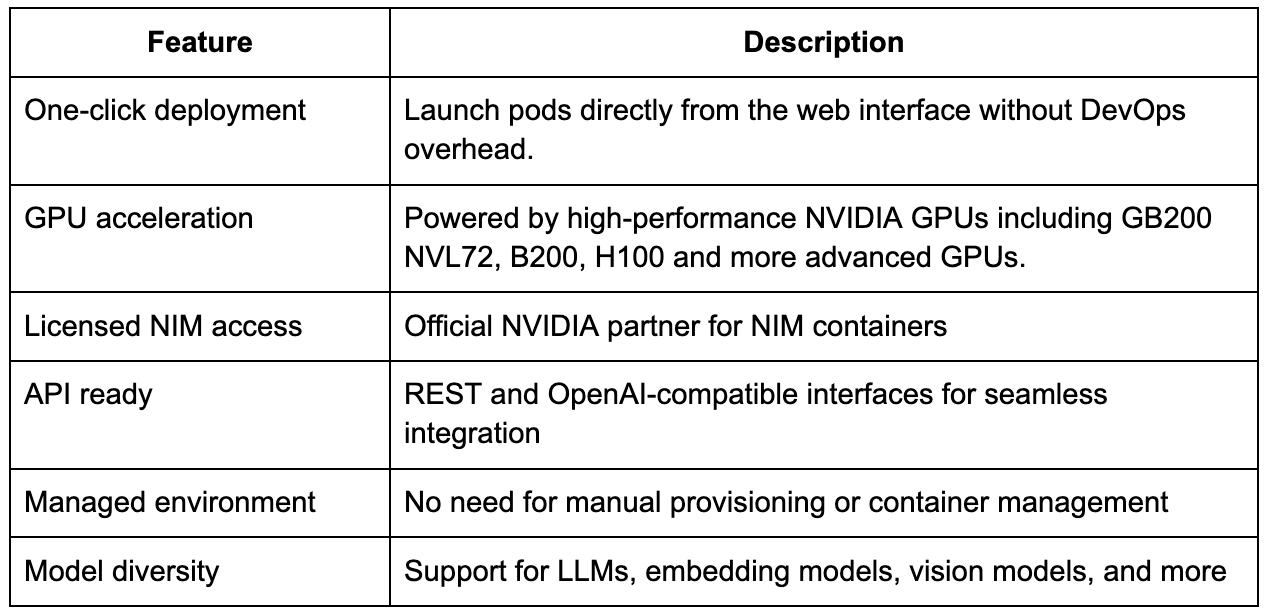
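The two NVIDIA retrieval models in this list are typically combined in a retrieval-augmented generation pipeline: the embedding model turns queries and passages into vectors for a first-pass search, and the reranker rescores the shortlist. The sketch below builds request bodies for both, following the request shapes in NVIDIA's NeMo Retriever documentation as we understand them (`POST /v1/embeddings` with an `input_type` of `"query"` or `"passage"`, and `POST /v1/ranking` returning a `rankings` list of `index`/`logit` pairs). Treat these shapes as assumptions to verify against the API reference of the container you deploy; the model name the API expects may also differ from the registry entry shown above. All function names are hypothetical.

```python
import json
import urllib.request

# Assumed routes for NVIDIA retrieval NIMs; verify against your container's docs.
EMBED_PATH = "/v1/embeddings"
RANK_PATH = "/v1/ranking"


def build_embedding_request(model: str, texts: list, input_type: str) -> dict:
    """Embedding payload; retrieval embedders distinguish queries from passages."""
    if input_type not in ("query", "passage"):
        raise ValueError("input_type must be 'query' or 'passage'")
    return {"model": model, "input": texts, "input_type": input_type}


def build_rerank_request(model: str, query: str, passages: list) -> dict:
    """Reranking payload: one query scored against a shortlist of passages."""
    return {
        "model": model,
        "query": {"text": query},
        "passages": [{"text": p} for p in passages],
    }


def order_by_rankings(passages: list, response: dict) -> list:
    """Reorder passages by descending reranker logit.

    Expects a response shaped like {"rankings": [{"index": i, "logit": s}, ...]}.
    """
    ranked = sorted(response["rankings"], key=lambda r: r["logit"], reverse=True)
    return [passages[r["index"]] for r in ranked]


def post_json(url: str, payload: dict, timeout: float = 60.0) -> dict:
    """POST a JSON payload and decode the JSON response body."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)
```

In practice you would embed passages once with `input_type="passage"`, embed each incoming query with `input_type="query"`, take the nearest neighbors from a vector store, and pass that shortlist to the reranker before building the LLM prompt.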

Benefits of Bitdeer AI for NIM Deployment

Bitdeer AI provides a fully managed environment that makes NIM deployment fast, reliable, and accessible. Because the platform handles containers, clusters, and GPU provisioning, teams spend less effort on operations and can focus on performance across diverse workloads.

How to Get Started

To deploy a NIM container on Bitdeer AI:

- Log in to Bitdeer AI Studio

- In AI Studio, open the Container Registry

- Choose a model such as openai/gpt-oss-120b

- Click Launch Pod

- Start sending requests to your dedicated endpoint
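After clicking Launch Pod, the model may need time to load before the endpoint accepts requests, especially for large models such as gpt-oss-120b. NVIDIA's LLM NIMs expose a readiness probe at `/v1/health/ready`, so a client can poll it before sending traffic. The following is a minimal sketch, assuming your Bitdeer AI endpoint forwards that route; the `ENDPOINT` value and all function names are placeholders. The clock and sleep functions are injectable so the polling logic can be tested without a live server.

```python
import time
import urllib.request
from typing import Callable

# Placeholder (assumption): the dedicated endpoint URL shown for your pod.
ENDPOINT = "https://your-endpoint.example.com"


def http_ready(base_url: str, timeout: float = 5.0) -> bool:
    """Return True if GET {base_url}/v1/health/ready answers with HTTP 200."""
    try:
        with urllib.request.urlopen(f"{base_url}/v1/health/ready", timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # URLError, HTTPError (e.g. 503 while the model loads) and socket
        # timeouts are all OSError subclasses: treat them as "not ready yet".
        return False


def wait_until_ready(
    check: Callable[[], bool],
    timeout_s: float = 600.0,
    interval_s: float = 10.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> bool:
    """Poll check() every interval_s seconds until it succeeds or timeout_s elapses."""
    deadline = clock() + timeout_s
    while True:
        if check():
            return True
        if clock() >= deadline:
            return False
        sleep(interval_s)
```

`wait_until_ready(lambda: http_ready(ENDPOINT))` returns `True` once the model is loaded; from then on, requests can go to the OpenAI-compatible routes, such as `/v1/chat/completions`, on the same endpoint.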


Conclusion

With Bitdeer AI and NVIDIA NIMs, deploying foundation models is no longer a complicated, time-consuming process. Developers can move from model selection to production-grade inference in a matter of minutes. Whether for prototyping, batch processing, or powering intelligent applications, Bitdeer AI makes it easy to operationalize state-of-the-art models in a scalable and reliable environment.