Modern GPU Cooling for AI: From Airflow to Cold Plate Systems

As GPUs evolve from gaming accelerators into the backbone of trillion-parameter AI models, thermal design has moved from a peripheral concern to a central pillar of system architecture. With thermal design power (TDP) rising sharply across GPU generations, cooling is no longer a simple choice between air and liquid; it is a complex trade-off among performance, energy efficiency, deployment density, and total cost of ownership.

Among the companies shaping this transition, NVIDIA has played a pivotal role. Its high-power GPU architectures have effectively set new thermal baselines for data center hardware, prompting a broader industry shift toward liquid cooling, particularly solutions based on cold plates and direct-to-chip heat transfer. Through platform-level design innovations and standardized thermal guidelines, NVIDIA has helped define the technical trajectory and ecosystem adoption of modern GPU cooling strategies.

Understanding the Trend: From General-Purpose to Purpose-Built Cooling

Early GPU designs relied predominantly on air cooling. These solutions, comprising aluminum heatsinks, copper heat pipes, and one or two axial fans, were sufficient for gaming workloads and occasional compute acceleration. However, as GPUs took on a central role in deep learning, data analytics, and real-time inference, their power consumption surged from under 200 W to 1,000 W and beyond.

Air cooling responded with larger heatsinks, denser fin stacks, and more powerful fans. Blower-style designs exhausted hot air out of compact systems, while open-shroud designs circulated air within the chassis. But as TDP climbed, air cooling increasingly hit its limits in noise, reliability, and cooling headroom.

In parallel, liquid cooling moved from enthusiast circles into mainstream and enterprise use. AIO (All-in-One) systems offered better thermal performance with lower acoustic output, making them well suited to high-end gaming rigs and compact workstations. For data centers, cold plate liquid cooling became a practical necessity as GPU density increased and space for airflow diminished. Today, entire servers can even be submerged in dielectric fluid (immersion cooling) to maximize heat transfer and reduce mechanical complexity.

Cooling Principles: Air vs. Liquid Cooling

Understanding the fundamental principles behind each cooling method helps illustrate their trade-offs:

Air Cooling System:

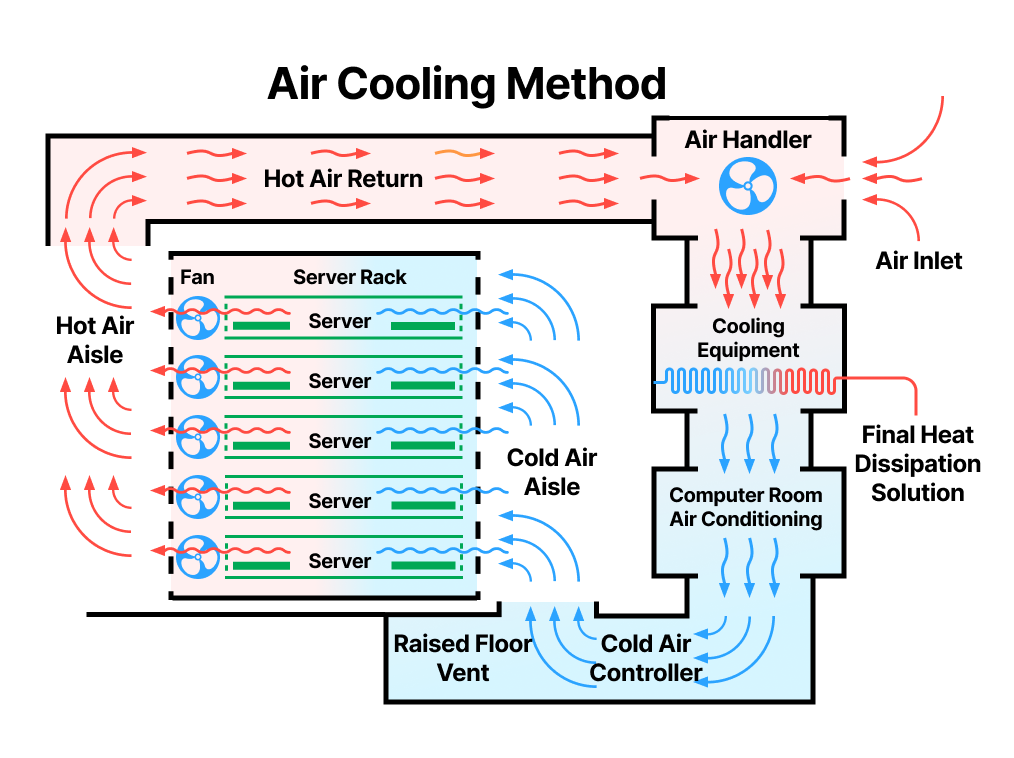

The core heat dissipation pathway in an air-cooled system involves three key stages:

- Conductive Contact: Heat is first transferred from the GPU chip through direct contact with a precisely machined metal base, typically copper or aluminum, chosen for the high thermal conductivity of these metals.

- Heat Pipe Transport: Working fluid inside vacuum-sealed heat pipes evaporates at the hot end, carries heat as vapor to the fin array, condenses there, and returns through a wick, moving heat rapidly with only a small temperature drop.

- Forced Convection: Axial or centrifugal fans drive airflow across the fin array to dissipate the heat into the surrounding environment.

Several factors influence air cooling efficiency. Aerodynamic design directly affects thermal performance through airflow resistance, turbulence control, and the prevention of short-circuit airflow (air that bypasses or recirculates around the fins instead of passing through them). Ambient air temperature and density also play a key role: high inlet temperatures or low air pressure (for example, at high altitude) reduce cooling effectiveness. Localized hot spots may form inside the chassis, making proper airflow layout critical. The thermal conductivity of heatsink materials also matters: copper offers excellent conductivity but is heavier and more expensive, while aluminum alloys provide a good balance between weight and cost.
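To make the role of air density concrete, the sketch below applies the sensible-heat balance Q = ρ · V̇ · c_p · ΔT to estimate how much airflow a 1,000 W GPU needs. It is a minimal illustration, assuming dry air treated as an ideal gas, an approximate specific heat of 1,005 J/(kg·K), and a hypothetical 15 K air temperature rise across the heatsink; the function names and the altitude scenario are illustrative and not taken from any vendor tool.

```python
# Sensible-heat balance for forced-air cooling: Q = rho * V_dot * cp * dT.
# Approximate property values for dry air; these are assumptions, not measurements.

R_DRY_AIR = 287.05          # specific gas constant of dry air, J/(kg*K)
CP_AIR = 1005.0             # specific heat of air, J/(kg*K), approx.
M3_PER_S_TO_CFM = 2118.88   # 1 m^3/s is about 2118.88 cubic feet per minute

def air_density(pressure_pa: float, temp_c: float) -> float:
    """Ideal-gas density of dry air in kg/m^3."""
    return pressure_pa / (R_DRY_AIR * (temp_c + 273.15))

def required_airflow_m3_per_s(heat_w: float, delta_t_k: float,
                              pressure_pa: float = 101_325.0,
                              inlet_temp_c: float = 25.0) -> float:
    """Volumetric airflow that removes heat_w with a delta_t_k air temperature rise."""
    rho = air_density(pressure_pa, inlet_temp_c)
    return heat_w / (rho * CP_AIR * delta_t_k)

# 1,000 W GPU with a HYPOTHETICAL 15 K rise across the heatsink.
for label, pressure, inlet in [("sea level, 25 C inlet", 101_325.0, 25.0),
                               ("about 2,000 m altitude, 35 C inlet", 79_500.0, 35.0)]:
    flow = required_airflow_m3_per_s(1000.0, 15.0, pressure, inlet)
    print(f"{label}: {flow:.3f} m^3/s (about {flow * M3_PER_S_TO_CFM:.0f} CFM)")
```

Because thinner, warmer air holds less heat per cubic meter, the same GPU needs noticeably more airflow (and therefore more fan power and noise) at altitude or with hot inlet air.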

The diagram above illustrates the architecture of the air cooling system.

Liquid Cooling System:

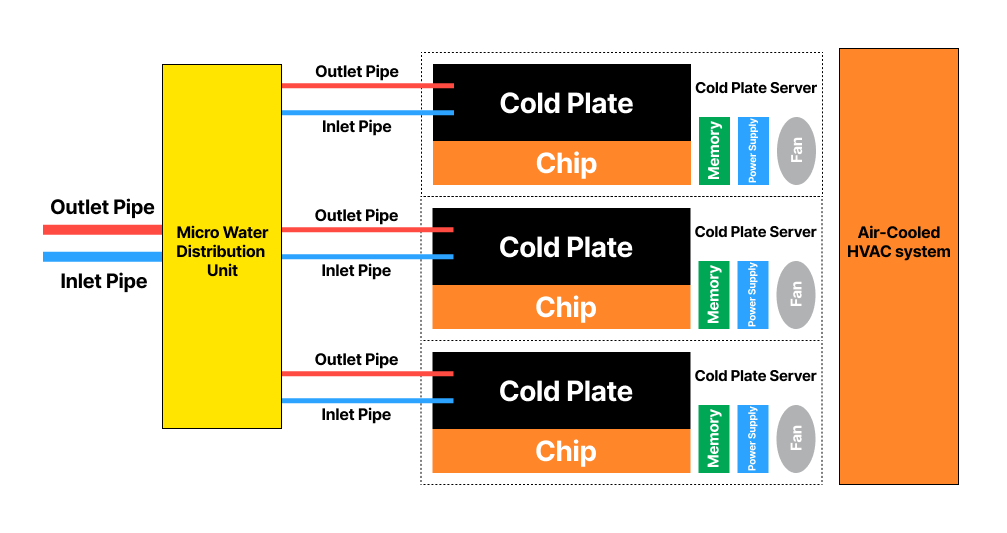

The liquid cooling system utilizes a two-stage heat transfer architecture:

- Primary Heat Exchange: A microchannel cold plate captures heat directly from the GPU through physical contact, via a thermal interface material that in some designs is liquid metal. The coolant, typically PG25 (a mixture of 25% propylene glycol and 75% water, usually deionized or softened), is pumped through the microchannels, where turbulent flow improves heat transfer. Its high specific heat capacity lets it absorb a large amount of heat per unit mass.

- Secondary Heat Dissipation: The heated coolant then flows through a low-resistance heat exchanger, where the heat is dissipated via either:

  - Liquid-to-air convective heat exchange (e.g., a radiator with fan-assisted airflow), or

  - Phase-change heat transfer in immersion cooling systems.

The performance advantage of liquid cooling comes from the physical properties of the coolant: water-based coolants have a volumetric heat capacity over 3,000 times that of air, so a given volume of flow carries far more heat. Liquid systems that use refrigerants can also cool below ambient temperature. Despite engineering challenges such as the pumping energy needed to overcome viscous losses, pump cavitation, leak risk, and more complex maintenance, liquid cooling is increasingly becoming the mainstream solution for high-power computing, including HPC and AI accelerators.
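The volumetric heat capacity argument can be checked with a few lines of arithmetic. The sketch below compares air, water, and PG25, and computes the flow needed to absorb 1 kW with a 10 K temperature rise. The property values are approximate room-temperature figures, and PG25 data in particular varies by supplier and temperature, so treat them as assumptions; the dictionary and function names are hypothetical.

```python
# Approximate near-room-temperature properties (assumptions; PG25 varies by supplier).
FLUIDS = {
    # name: (density in kg/m^3, specific heat in J/(kg*K))
    "air": (1.18, 1005.0),
    "water": (997.0, 4180.0),
    "PG25": (1020.0, 3900.0),
}

def volumetric_heat_capacity(name: str) -> float:
    """Heat stored per cubic meter per kelvin, J/(m^3*K)."""
    rho, cp = FLUIDS[name]
    return rho * cp

def flow_for_heat_load(name: str, heat_w: float, delta_t_k: float) -> float:
    """Volumetric flow (m^3/s) that absorbs heat_w with a delta_t_k temperature rise."""
    return heat_w / (volumetric_heat_capacity(name) * delta_t_k)

for name in FLUIDS:
    ratio = volumetric_heat_capacity(name) / volumetric_heat_capacity("air")
    lpm = flow_for_heat_load(name, 1000.0, 10.0) * 60_000.0  # m^3/s -> L/min
    print(f"{name:>5}: {ratio:6.0f}x air, {lpm:9.2f} L/min for 1 kW at dT = 10 K")
```

With these inputs, about 1.5 L/min of PG25 absorbs the same 1 kW that would take roughly 5,000 L/min of air, which is why a compact cold plate can replace a large fin stack.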

The following illustrates how cold plate liquid cooling works:

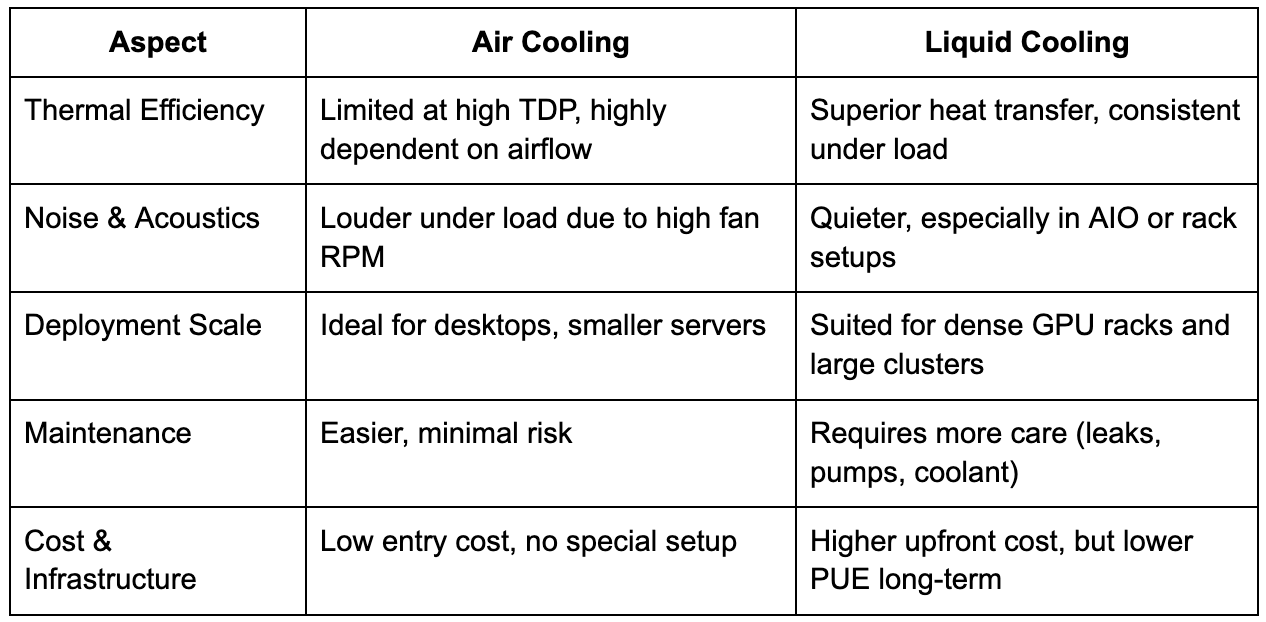

After understanding the basic working principles of air and liquid cooling, we can more clearly compare their performance across key operational aspects. These differences directly impact system design choices in AI infrastructure.

Air Cooling vs Liquid Cooling: Key Differences

The core distinction lies in how heat is moved: air cooling dissipates heat directly into the surrounding air, while liquid cooling carries heat away in a coolant to a radiator or heat exchanger. Liquid cooling is inherently more efficient because liquids have far higher thermal conductivity and specific heat capacity than air (water conducts heat roughly 20 times better than air and stores about four times more heat per kilogram).
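One common way to reason about this difference is a series thermal-resistance model: die temperature equals fluid temperature plus power times the sum of the resistances along the heat path, with convective resistance R = 1/(hA). The sketch below is illustrative only; the heat-transfer coefficients, areas, and resistances are hypothetical round numbers chosen to show the structure of the calculation, not measured data for any product, and the model ignores the fluid's own temperature rise along the path.

```python
from dataclasses import dataclass

@dataclass
class ThermalPath:
    """Series thermal-resistance model from GPU die to the cooling fluid."""
    name: str
    r_tim: float      # thermal interface resistance, K/W
    r_spread: float   # base plate / heat pipes / cold plate body, K/W
    h: float          # convective heat-transfer coefficient, W/(m^2*K)
    area_m2: float    # fin area (air) or channel wetted area (liquid), m^2

    def r_conv(self) -> float:
        """Convective resistance R = 1 / (h * A), in K/W."""
        return 1.0 / (self.h * self.area_m2)

    def r_total(self) -> float:
        return self.r_tim + self.r_spread + self.r_conv()

    def die_temp_c(self, power_w: float, fluid_temp_c: float) -> float:
        """Steady-state die temperature, ignoring the fluid's own temperature rise."""
        return fluid_temp_c + power_w * self.r_total()

# HYPOTHETICAL round numbers for illustration only.
air = ThermalPath("air heatsink", r_tim=0.01, r_spread=0.02, h=100.0, area_m2=0.5)
liquid = ThermalPath("cold plate", r_tim=0.01, r_spread=0.005, h=10_000.0, area_m2=0.02)

for path in (air, liquid):
    print(f"{path.name}: R_conv = {path.r_conv():.3f} K/W, "
          f"die at 1000 W with 30 C fluid = {path.die_temp_c(1000.0, 30.0):.1f} C")
```

The liquid path comes out ahead here mainly because its convective coefficient h is orders of magnitude higher, so a small wetted area achieves a lower convective resistance than a large fin array.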

Why This Matters for AI Infrastructure

As AI models grow in complexity, so does the demand for sustained GPU performance. Thermal throttling reduces training throughput and destabilizes inference latency, and sustained overheating in long-duration workloads can even contribute to hardware failure. Efficient cooling helps ensure:

- GPUs operate at full performance without thermal-induced throttling

- Higher density per rack, maximizing facility-level compute per square foot

- Improved system lifespan and reliability under 24/7 workloads

- Lower total power consumption, since less energy is spent on fans and facility cooling
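The energy point is often tracked with Power Usage Effectiveness (PUE), defined as total facility power divided by IT equipment power. The sketch below computes PUE for two hypothetical facilities with the same IT load but different cooling overhead; all kilowatt figures are invented for illustration. Note that server fan power is usually counted as IT load, so savings from fewer or slower fans do not show up in PUE even though they reduce total consumption.

```python
def pue(it_kw: float, cooling_kw: float, other_overhead_kw: float) -> float:
    """Power Usage Effectiveness = total facility power / IT equipment power."""
    if it_kw <= 0:
        raise ValueError("IT load must be positive")
    return (it_kw + cooling_kw + other_overhead_kw) / it_kw

# HYPOTHETICAL facilities with the same 1,000 kW IT load.
scenarios = {
    "air-cooled (hypothetical)": pue(1000.0, 400.0, 100.0),
    "liquid-cooled (hypothetical)": pue(1000.0, 150.0, 100.0),
}
for name, value in scenarios.items():
    print(f"{name}: PUE = {value:.2f}")
```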

Many cloud service providers and hyperscale data centers are standardizing on cold plate liquid cooling for their AI clusters, and some are experimenting with immersion cooling to further lower operational complexity and improve thermal efficiency.

Case Study: Cooling Architecture of NVIDIA GB200 NVL72 at Bitdeer AI Data Center

At Bitdeer AI’s GPU data center, we deployed NVIDIA’s GB200 NVL72, one of the highest compute-density GPU systems currently available. Each rack integrates up to 72 Blackwell GPUs and 36 Grace CPUs. With each GPU drawing over 1,000 watts at peak load, traditional air cooling can no longer meet the rack's thermal demands.

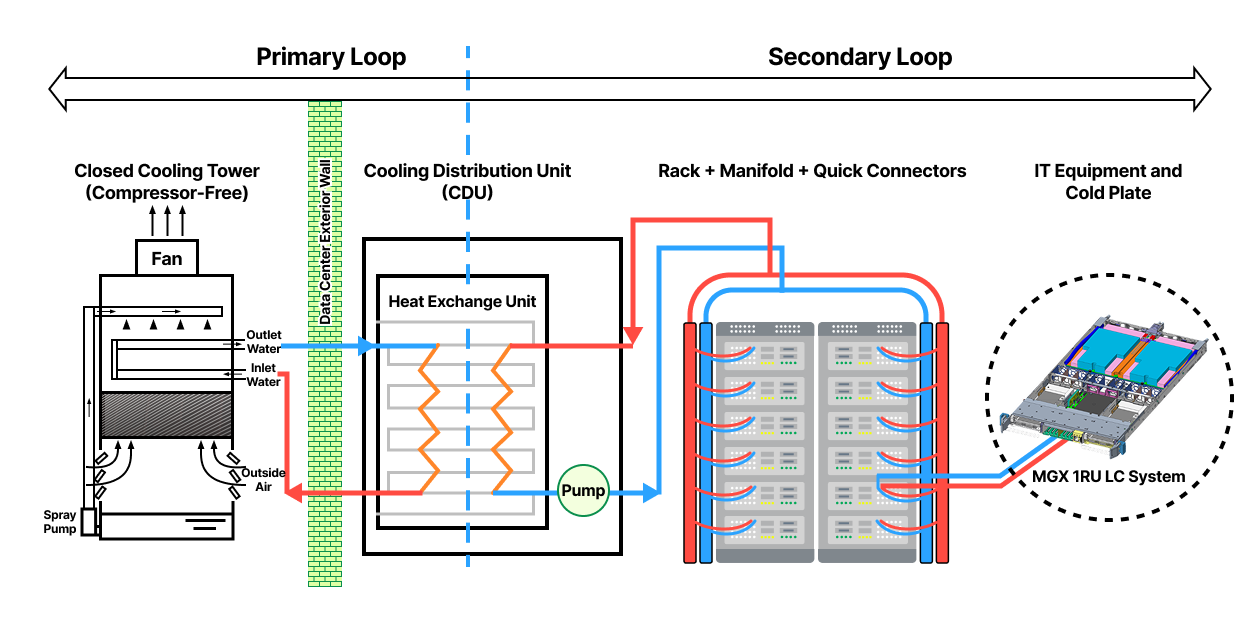

To support such high heat density, the NVL72 rack uses a hybrid cooling architecture in which cold plate liquid cooling removes 80 percent of the heat and air cooling the remaining 20 percent:

- Liquid Cooling System: The GPUs use a single-phase cold plate liquid cooling solution. Each GPU is fitted with its own cold plate, and the coolant carries heat directly to the Cooling Distribution Unit (CDU), from which it is transferred outdoors through a closed-loop system. Pumps, manifolds, the CDU, and liquid cooling piping together form an efficient and stable heat exchange path.

- Air Cooling Assistance: Air cooling provides supplementary heat dissipation for components without cold plates, such as power modules and input/output areas, helping maintain overall thermal balance and adding flexibility to the rack's structural design.
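A back-of-the-envelope sizing of this split can be sketched in Python. The GPU count, the 1,000 W per-GPU figure (used as a lower bound, since the GPUs draw over 1,000 watts), and the 80/20 split come from the description above; the 120 kW total rack load, the temperature rises, and the PG25-like fluid properties are hypothetical assumptions, not Bitdeer AI or NVIDIA specifications.

```python
# Back-of-the-envelope heat split for a hybrid liquid/air rack.
# Values marked HYPOTHETICAL are illustrative assumptions, not vendor specs.

GPUS_PER_RACK = 72      # NVL72 configuration
GPU_PEAK_W = 1000.0     # "over 1000 W" per GPU, used as a lower bound
LIQUID_SHARE = 0.80     # share of heat removed by cold plates

def split_heat_load(total_rack_w: float,
                    liquid_share: float = LIQUID_SHARE) -> tuple[float, float]:
    """Return (liquid-cooled heat, air-cooled heat) in watts."""
    liquid_w = total_rack_w * liquid_share
    return liquid_w, total_rack_w - liquid_w

def coolant_flow_lpm(heat_w: float, delta_t_k: float,
                     rho: float = 1020.0, cp: float = 3900.0) -> float:
    """Coolant flow in L/min; rho and cp are HYPOTHETICAL PG25-like properties."""
    return heat_w / (rho * cp * delta_t_k) * 60_000.0

def airflow_m3_per_s(heat_w: float, delta_t_k: float,
                     rho: float = 1.18, cp: float = 1005.0) -> float:
    """Airflow in m^3/s for air near sea level at room temperature."""
    return heat_w / (rho * cp * delta_t_k)

gpu_floor_w = GPUS_PER_RACK * GPU_PEAK_W   # GPU heat alone, lower bound
rack_total_w = 120_000.0                   # HYPOTHETICAL total rack heat load
liquid_w, air_w = split_heat_load(rack_total_w)

print(f"GPU heat (lower bound): {gpu_floor_w / 1000:.0f} kW")
print(f"Liquid loop: {liquid_w / 1000:.0f} kW -> "
      f"{coolant_flow_lpm(liquid_w, 10.0):.0f} L/min at dT = 10 K")
print(f"Air path:    {air_w / 1000:.0f} kW -> "
      f"{airflow_m3_per_s(air_w, 15.0):.2f} m^3/s at dT = 15 K")
```

Under these assumptions the liquid loop carries 96 kW, more than the 72 kW the GPUs alone contribute, while the air path still has to move over a cubic meter of air per second for the remaining load.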

This hybrid cooling architecture enables stable operation of GPU clusters at high deployment density, avoiding the throttling and instability caused by thermal bottlenecks. It also improves power management, operational efficiency, and sustainability, making it a critical enabler for next-generation AI infrastructure. Because cold plate liquid cooling requires no major modifications to server structure and is mature and relatively easy to deploy and maintain, it has become the mainstream choice for large-scale GPU cluster deployments.

Below is an overview of the liquid cooling architecture principles.

Conclusion

The evolution of GPU cooling mirrors the trajectory of AI technology. Having moved from auxiliary design to system-level architectural integration, cooling is no longer an optional feature. It is now a critical factor in training efficiency, energy consumption, and infrastructure sustainability. In an era where large models drive exponential growth in computing demand, cooling capacity determines not only whether individual GPUs can deliver their full performance but also rack deployment density, data center power limits, and operating cost structures. The choice between air and liquid cooling is no longer a matter of technical preference but a systemic decision spanning architecture design, operations strategy, and long-term return on investment.

Bitdeer AI prioritizes performance and efficiency by deploying a hybrid architecture that combines cold plate liquid cooling with air cooling in the NVL72 system. This approach enables high-density, energy-efficient AI clusters. We believe that competition in AI computing extends beyond chips to overall system engineering. As AI rapidly transforms industries and society, cooling systems must evolve alongside hardware to ensure reliable thermal management and support the future of AI infrastructure.