Small Language Models vs Large Language Models: Power, Practicality, and the Future of Agentic AI

As language models become increasingly central to modern AI applications, particularly within agentic systems, the ongoing pursuit of ever-larger models is prompting a new question: could smaller models provide a smarter and more efficient alternative? While Large Language Models (LLMs) are widely praised for their broad capabilities, growing evidence suggests that Small Language Models (SLMs) may offer a more scalable, cost-effective, and sustainable solution, especially for agents focused on specialized or repetitive tasks.

This article explores the fundamental differences between SLMs and LLMs, their strengths, and why there is growing attention on SLMs in the context of agentic AI.

Definitions: SLM vs LLM

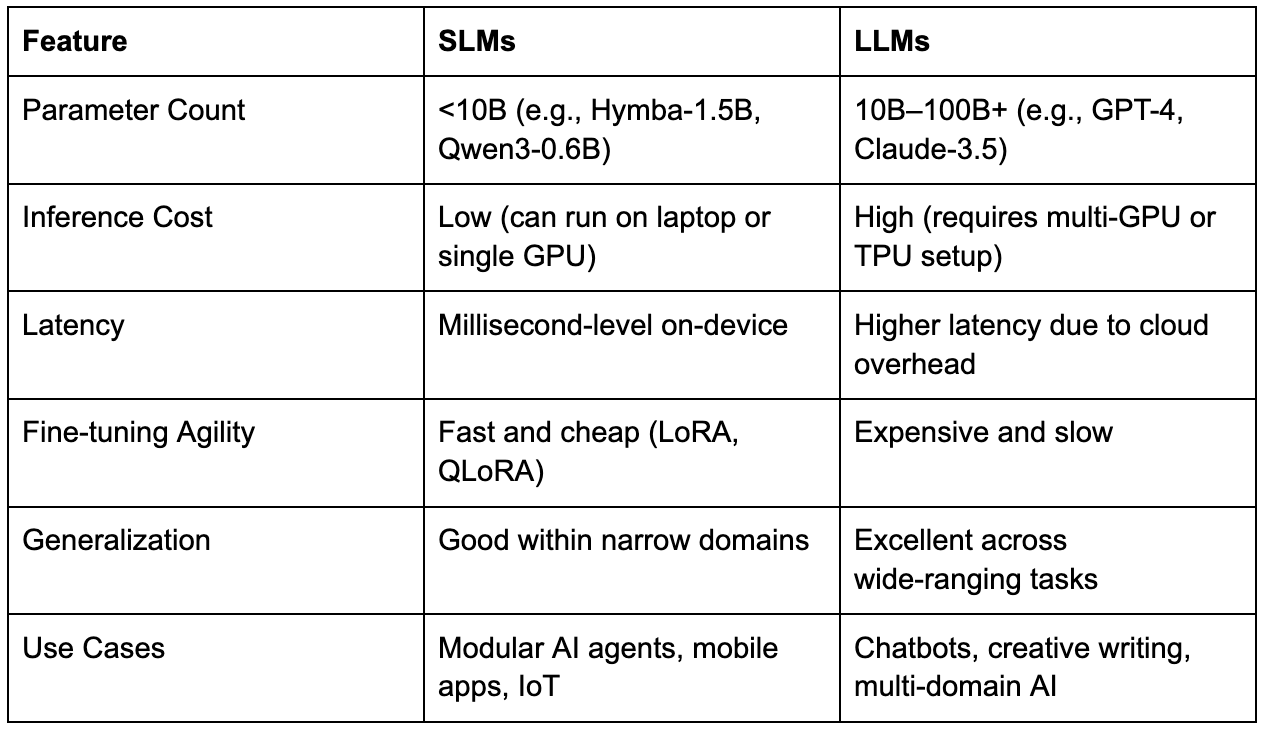

There is no absolute line between "small" and "large" in language model taxonomy, but a useful working distinction lies in deployment footprint:

- Small Language Models (SLMs) are language models trained to perform well on specific domains or tasks, often optimized for efficiency and fast inference in narrowly defined contexts. They are designed to run on less resource-intensive infrastructure, such as consumer-grade GPUs or edge devices, making them ideal for latency-sensitive or cost-constrained environments.

- Large Language Models (LLMs), by contrast, are trained on broad, diverse datasets to handle a wide range of general-purpose language tasks. These models typically require datacenter-scale infrastructure for training and inference, and are well-suited for applications demanding open-ended reasoning, broad knowledge coverage, and multi-domain versatility.

In essence, SLMs prioritize specialization and efficiency, while LLMs emphasize generality and scale, each serving different roles in the evolving AI landscape. Despite these differences, both share the same underlying architecture and goal: to understand and generate human language effectively in service of intelligent applications.

There is currently no fixed standard for where the boundary falls. For now, we follow NVIDIA’s definition [1]: as of 2025, most models with fewer than 10 billion parameters can be considered SLMs, and anything larger is classified as an LLM. The following summarizes a comparison of key features between SLMs and LLMs.

Performance in Inference: Are SLMs Good Enough?

Thanks to architectural innovations and training strategies, SLMs today rival older generations of LLMs on many tasks. Benchmarks cited in the NVIDIA paper show that:

- Phi-2 (2.7B) achieves reasoning and coding performance on par with 30B models [1].

- NVIDIA Hymba-1.5B outperforms 13B LLMs in instruction-following accuracy [1].

- Hugging Face’s SmolLM2 models (125M–1.7B) achieve language understanding on par with much larger models [1].

What makes this more compelling is the nature of agentic AI: most tasks involve predictable, narrow domains like parsing emails, scheduling, summarizing text, or calling APIs. For these workflows, several small, specialized models are often more effective than a single large generalist model [1].

Market Trends: SLMs and the Shift Toward Agentic AI

The global small language model (SLM) market is expected to grow from USD 0.93 billion in 2025 to USD 5.45 billion by 2032, with a compound annual growth rate of 28.7 percent (MarketsandMarkets). This growth reflects the increasing need for efficient AI models that can operate locally, especially in edge environments such as smartphones, sensors, and embedded systems.
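As a quick sanity check on the figures above, the growth rate can be recomputed from the two endpoints. This is a minimal sketch, assuming seven compounding periods (2025 to 2032) and the standard compound annual growth rate formula. The function name `cagr` is ours, not something from the cited forecast.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.25 means 25% per year)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Endpoints quoted in the text: USD 0.93 billion (2025), USD 5.45 billion (2032).
# Assumption: the forecast compounds once per year over 2032 - 2025 = 7 periods.
rate = cagr(0.93, 5.45, 2032 - 2025)
print(f"Implied CAGR: {rate:.1%}")
```

Under that assumption the implied rate comes out within rounding of the quoted 28.7 percent, so the endpoints and the growth rate are consistent with each other.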

As AI agents become more widely used in real-time and privacy-sensitive applications, SLMs provide a practical alternative to large models. Their ability to run specialized tasks with low latency and lower infrastructure requirements makes them well-suited for scalable and adaptive agentic architectures.

The Case for SLMs in AI Agent Architectures

AI agents increasingly rely on language models to manage structured workflows, from input parsing to tool orchestration. While large models excel at open-ended reasoning, many agent tasks are narrow, repetitive, and time-sensitive, making them better suited for small language models (SLMs).

SLMs can be fine-tuned for specific subtasks and deployed with lower latency and cost. Their efficiency and adaptability make them ideal for modular agent designs, especially in business scenarios where performance, control, and scale matter.
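To make the modular idea concrete, here is a minimal sketch of a router that sends narrow, repetitive subtasks to a small model and everything else to a large one. Everything in it is an illustrative assumption: `small_model` and `large_model` are hypothetical stand-ins for real inference calls, and the task names in `NARROW_TASKS` are examples we chose, not a standard taxonomy.

```python
from dataclasses import dataclass


def small_model(prompt: str) -> str:
    """Hypothetical stand-in for a call to a locally hosted, fine-tuned SLM."""
    return f"[SLM] {prompt}"


def large_model(prompt: str) -> str:
    """Hypothetical stand-in for a call to a cloud-hosted LLM."""
    return f"[LLM] {prompt}"


# Assumed set of subtasks considered narrow enough for a small model.
NARROW_TASKS = {"classify_ticket", "extract_fields", "summarize", "call_api"}


@dataclass
class AgentTask:
    kind: str    # label assigned by the agent's planner (assumed to exist upstream)
    prompt: str  # text passed to whichever model handles the task


def route(task: AgentTask) -> str:
    """Send known narrow tasks to the SLM and fall back to the LLM otherwise."""
    handler = small_model if task.kind in NARROW_TASKS else large_model
    return handler(task.prompt)


print(route(AgentTask("classify_ticket", "My invoice is wrong, please fix it.")))
print(route(AgentTask("open_ended_planning", "Draft a migration strategy.")))
```

In a real system the routing rule would likely be richer (confidence thresholds, fallbacks when the small model fails), but the shape stays the same: the cheap model handles the default path and the large model is the exception.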

Because SLMs are well-suited to business scenarios that require speed, predictability, and domain-specific reasoning, they are already being applied across industries. Below are some practical use cases where SLMs offer clear advantages:

- Customer Service Automation
  - Classifying support tickets based on intent or urgency
  - Filling structured forms from free-text inputs
  - Generating consistent, formatted replies to common queries

- Financial Services
  - Extracting KYC (Know Your Customer) information from documents
  - Tagging and annotating transactions in real time
  - Summarizing compliance documents with strict formatting requirements

- Retail and E-commerce
  - Handling product inquiries and order status updates via chatbots
  - Performing price or inventory lookups on edge devices in-store
  - Automating FAQ responses with fast, offline-capable agents

- Healthcare and Legal
  - Summarizing clinical notes or legal contracts
  - Redacting sensitive information before storage or sharing
  - Classifying clauses or sections in structured documentation

Looking Ahead: The Future of SLMs and LLMs

The future is not a zero-sum game between SLMs and LLMs. Instead, we’re likely to see:

- LLMs continuing to power high-stakes, open-domain reasoning in the cloud.

- SLMs dominating edge, mobile, and embedded use cases and forming the bulk of agentic invocations [1].

The trajectory of language model deployments is not a contest between small and large models, but a shift toward intelligent, purposeful orchestration. LLMs continue to play a vital role in complex tasks that require knowledge breadth and contextual depth, especially in the cloud. However, SLMs are emerging as a practical engine behind the majority of agentic operations running close to the user, enabling high responsiveness, and reducing dependence on centralized compute.

As agentic AI becomes more personalized, distributed, and integrated into daily workflows, the demand for fast, adaptable, and efficient models will grow. SLMs will then be more than lightweight alternatives; they will become the foundational building blocks for the next generation of intelligent systems.

What Can Bitdeer AI Offer?

While you may have the resources to run light workloads with SLMs, let us handle the heavy reasoning for you. Bitdeer AI provides scalable, high-performance infrastructure for deploying and serving foundation models, particularly large language models (LLMs), across cloud, hybrid, and enterprise environments. With pre-containerized model services, optimized GPU clusters, and support for popular open-source models like LLaMA 3, Mistral, and DeepSeek, Bitdeer AI helps developers and enterprises accelerate time-to-inference while reducing the complexity of managing AI workloads.

Our platform is designed to support every stage of the AI lifecycle: from model exploration and prototyping, to fine-tuning and multi-node deployment, to real-time inference with low-latency requirements. With a modular agent-ready architecture and full compatibility with modern MLOps stacks, Bitdeer AI enables organizations to build flexible, production-grade AI systems tailored to their needs, whether in finance, customer service, research, or beyond.

Conclusion

SLMs and LLMs each bring distinct value to the AI ecosystem. With the emergence of complex agentic applications, especially those that must run at the edge or scale economically, small models offer a compelling blend of power and practicality.

While the rise of SLMs is notable, LLMs remain the preferred choice when a task calls for broad, comprehensive knowledge across diverse domains. In agentic AI development, the most effective approach combines both: LLMs deliver overarching reasoning and context, while SLMs offer efficiency, adaptability, and task-specific agility. This complementary strategy allows AI systems to achieve both scale and sophistication for real-world applications.

Reference:

[1] Peter Belcak et al., Small Language Models are the Future of Agentic AI, arXiv:2506.02153v1, June 2025. https://arxiv.org/abs/2506.02153