Traditional Data Centers vs AI Data Centers: How Infrastructure Is Evolving to Support AI at Scale

Data centers have always reflected the dominant computing paradigm of their time. For many years, enterprise software, web services, and databases shaped how infrastructure was designed and operated. These workloads emphasized reliability, steady performance, and efficient resource sharing.

Artificial intelligence introduces a fundamentally different demand profile. Training and deploying modern AI systems requires large-scale parallel computation, rapid data movement, and significantly higher power density. As a result, a new class of infrastructure has emerged alongside traditional facilities: the AI data center. This article explains how traditional data centers and AI data centers differ in design, application, and business value.

What Is a Traditional Data Center?

A traditional data center is built to support a wide variety of general-purpose computing workloads. Its architecture prioritizes flexibility and operational stability across many applications.

Core characteristics include:

- CPU-focused compute, optimized for sequential and transactional processing

- Moderate power density, typically compatible with air-based cooling

- General-purpose networking, designed primarily for client-to-server traffic

- Multi-tenant workload support, often using virtualization technologies

This model remains well-suited for enterprise systems, web platforms, and business-critical applications that require predictable performance and high availability.

What Is an AI Data Center?

An AI data center is engineered specifically to support machine learning and artificial intelligence workloads. These environments are designed around accelerators rather than general-purpose processors.

Key attributes include:

- GPU or accelerator-centric clusters designed for parallel computation

- High power and thermal density, requiring advanced cooling approaches

- High-bandwidth, low-latency interconnects to support intensive data exchange

- Tightly integrated compute, storage, and networking to minimize bottlenecks

Instead of serving many unrelated workloads, AI data centers are optimized to run fewer but extremely compute-intensive jobs efficiently. Whereas traditional facilities typically rely on conventional TCP/IP networking over Ethernet, AI data centers often use InfiniBand or RoCE (RDMA over Converged Ethernet) fabrics so that the network does not become a bottleneck for the GPUs.
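To see why the interconnect matters, consider a rough estimate of the traffic generated when GPUs synchronize gradients. The sketch below assumes a ring all-reduce, in which each GPU sends 2 × (N − 1) / N times the payload size. The model size, GPU count, and link speeds are hypothetical values chosen for illustration, and the timing ignores latency, protocol overhead, and any overlap with computation.

```python
def ring_allreduce_bytes_per_gpu(payload_bytes: float, num_gpus: int) -> float:
    """Bytes each GPU sends during one ring all-reduce of `payload_bytes`."""
    if num_gpus < 2:
        return 0.0  # nothing to exchange with a single GPU
    return 2 * (num_gpus - 1) / num_gpus * payload_bytes


def transfer_seconds(bytes_sent: float, link_gbps: float) -> float:
    """Idealized time to send `bytes_sent` over a link of `link_gbps` gigabits/s."""
    return bytes_sent * 8 / (link_gbps * 1e9)


# Hypothetical inputs: 1 billion parameters stored as 2-byte values, 8 GPUs.
gradient_bytes = 1e9 * 2
num_gpus = 8

sent = ring_allreduce_bytes_per_gpu(gradient_bytes, num_gpus)
print(f"Each GPU sends {sent / 1e9:.2f} GB per synchronization")

# Illustrative link speeds only; not a statement about any specific product.
for gbps in (10, 100, 400):
    print(f"{gbps:>3} Gb/s link: {transfer_seconds(sent, gbps):.3f} s per synchronization")
```

Because this exchange can repeat on every training step, a slow link leaves the accelerators idle while they wait for data, which is the bottleneck that high-bandwidth fabrics are meant to avoid.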

Data Center Tier Classification: Tier I, Tier II, and Tier III

Before comparing traditional data centers and AI data centers, it is important to understand the commonly used data center tier classification system. Tier levels describe a data center’s reliability, redundancy, and maintainability rather than its computing performance. The system defines four levels, Tier I through Tier IV; the first three are summarized below.

Tier I Data Center

A Tier I data center represents the most basic level of data center infrastructure. It typically relies on a single path for power and cooling, with little or no redundancy. Planned maintenance or unexpected failures usually require a full system shutdown. Tier I facilities are best suited for non-critical workloads where availability requirements are minimal. It has an expected uptime of 99.671% (about 28.8 hours of downtime annually).

Tier II Data Center

Tier II data centers introduce limited redundancy in key components such as power supplies and cooling systems. While backup components exist, the infrastructure generally still operates on a single distribution path. Tier II improves reliability compared to Tier I, but maintenance activities may still cause service interruptions. This tier is commonly used for workloads with moderate availability requirements. It has an expected uptime of 99.741% (about 22.7 hours of downtime annually, often quoted as 22 hours).

Tier III Data Center

Tier III data centers are designed with concurrently maintainable infrastructure. Critical systems typically follow an N+1 redundancy model and support multiple power and cooling paths, allowing maintenance to be performed without interrupting operations. Tier III is widely adopted for enterprise-grade and cloud data centers and is suitable for mission-critical applications, including many AI workloads. It has an expected uptime of 99.982% (about 1.6 hours of downtime annually).

It is important to note that tier classification reflects infrastructure reliability rather than workload type or computing capability. AI data centers are often built on Tier III or higher standards, with additional design considerations for high-density power delivery and advanced cooling.
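The downtime figures quoted for each tier follow directly from the uptime percentages. As a quick check, the sketch below converts uptime to annual downtime, assuming a 365-day year of 8,760 hours; the function and variable names are illustrative.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; leap years are ignored


def annual_downtime_hours(uptime_percent: float) -> float:
    """Expected hours of downtime per year for a given uptime percentage."""
    return (1 - uptime_percent / 100) * HOURS_PER_YEAR


# Uptime percentages as stated for each tier in this article.
tier_uptime = {"Tier I": 99.671, "Tier II": 99.741, "Tier III": 99.982}

for tier, pct in tier_uptime.items():
    print(f"{tier}: {pct}% uptime -> {annual_downtime_hours(pct):.1f} hours of downtime per year")
```

The results round to roughly 28.8, 22.7, and 1.6 hours for Tier I, Tier II, and Tier III respectively.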

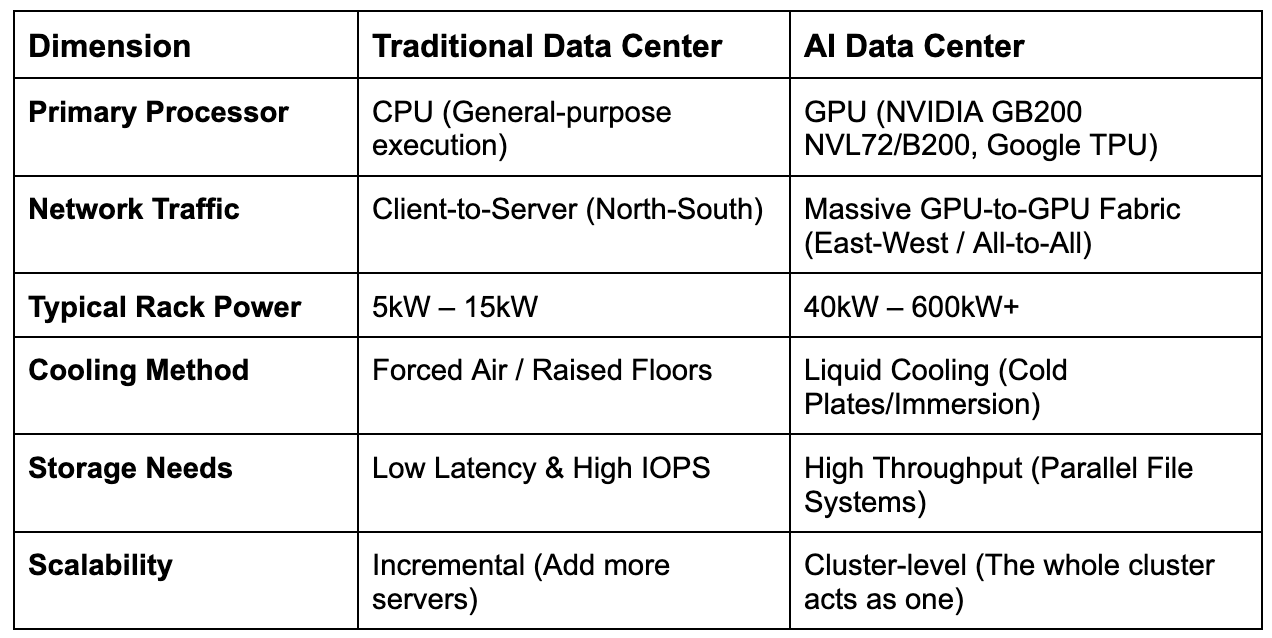

Architectural and Operational Analysis

The architectural gap between traditional and AI data centers has widened rapidly. CPUs are designed for versatility, but AI workloads demand the parallel execution of thousands of identical operations. GPUs and AI accelerators meet this demand, but they also introduce new constraints.

AI data centers must address:

- Power delivery challenges, as high-density racks draw dramatically more energy

- Thermal management, since air cooling alone is often insufficient at these densities

- Network congestion, since model training depends on constant GPU-to-GPU communication

- Data locality, ensuring storage and compute remain tightly coupled
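The power and thermal points above can be made concrete with simple arithmetic. The sketch below compares two racks using hypothetical server counts and wattages (they are assumptions for illustration, not measurements of any real system) and converts the electrical load into heat using the standard conversion of about 3,412 BTU/h per kW, since nearly all power drawn by IT equipment ends up as heat that the cooling system must remove.

```python
BTU_PER_HOUR_PER_KW = 3412.14  # standard unit conversion: 1 kW is about 3,412 BTU/h


def rack_power_kw(servers_per_rack: int, watts_per_server: float) -> float:
    """Total electrical draw of a rack in kilowatts."""
    return servers_per_rack * watts_per_server / 1000


def heat_load_btu_per_hour(power_kw: float) -> float:
    """Heat the cooling system must remove, assuming all power becomes heat."""
    return power_kw * BTU_PER_HOUR_PER_KW


# Hypothetical configurations chosen only to illustrate the gap in density.
racks = {
    "CPU rack (hypothetical)": rack_power_kw(servers_per_rack=20, watts_per_server=500),
    "GPU rack (hypothetical)": rack_power_kw(servers_per_rack=4, watts_per_server=10_000),
}

for name, kw in racks.items():
    print(f"{name}: {kw:.0f} kW, about {heat_load_btu_per_hour(kw):,.0f} BTU/h of heat")
```

Under these assumptions, the GPU rack draws four times the power of the CPU rack with one fifth as many servers, which is why power delivery and cooling have to be designed together.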

Operationally, this shifts the focus from managing individual virtual machines to managing an entire cluster as a single system. Success is no longer measured only by utilization rates but also by training time, inference latency, and performance per watt.

Below is a key comparison between traditional data centers and AI data centers:

Business Use Cases and Industry Impact

AI data centers are rapidly becoming a core source of competitive advantage for modern businesses. They enable organizations to move beyond isolated AI experiments toward large-scale model training, high-throughput inference, and AI agents that automate complex workflows such as customer support, software development, and data analysis. The ability to scale compute, data movement, and power density directly influences speed of innovation and time-to-market.

Across industries, AI data centers are reshaping how value is created. Financial institutions use them for real-time risk modeling and fraud detection, healthcare organizations for advanced medical imaging and diagnostics, and manufacturers for simulation, digital twins, and predictive maintenance. These AI-driven workloads are increasingly mission-critical rather than experimental.

As AI adoption accelerates, businesses are aligning infrastructure strategy around AI data centers as long-term strategic assets. Rather than serving as supporting systems, they are becoming the primary engines for innovation, operational efficiency, and competitive positioning, defining how quickly and effectively organizations can scale intelligence across their operations.

Conclusion

As AI systems grow in scale and complexity, infrastructure decisions are no longer just technical choices but strategic ones. The ability to combine cloud flexibility with purpose-built AI data center design is becoming essential for organizations that want to deploy advanced AI reliably and efficiently.

At Bitdeer AI, we build our cloud platform around this reality. By leveraging our group’s global infrastructure resources, including power capacity and data center sites, and combining them with flexible cloud capabilities, we provide an AI cloud foundation designed specifically for advanced workloads on advanced NVIDIA GPUs. This integrated approach enables scalable AI deployment while maintaining performance, energy efficiency, and operational resilience. We allow our customers to focus on building and deploying intelligence, while we manage the underlying AI infrastructure securely, sustainably, and at scale.