Faster Scale. Smarter Returns.

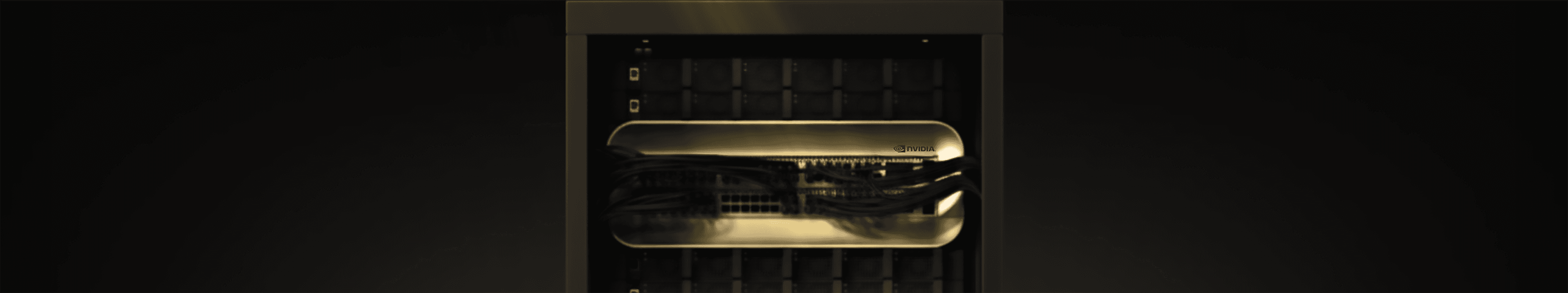

Scale without limits, innovate without compromise. NVIDIA GB200 NVL72 is here to maximize your AI returns. Deploy today and lead the change.

Supercharging Next-Generation AI and Accelerated Computing

Breakthrough Training & Inference Efficiency

Up to 30× faster inference and significantly shorter training cycles compared to previous generations.

High-Bandwidth, Low-Latency Interconnect

With 130 TB/s of NVLink bandwidth across GPUs and CPUs, NVIDIA GB200 NVL72 unlocks large-scale distributed training and multi-node workloads.

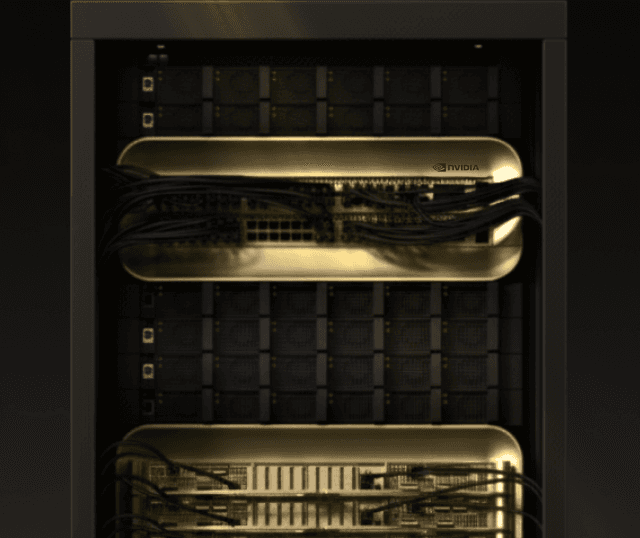

Sustainable, Liquid-Cooled Design

Advanced liquid cooling reduces energy and operational costs while supporting dense deployments at scale.

Massive Memory Capacity

Powered by HBM3e, with memory measured in terabytes and extreme bandwidth to handle trillion-parameter models and long-context inference.

The Journey to Next-Gen Performance

Experience the unseen story of NVIDIA GB200 NVL72, where every step reflects the innovation, precision, and scale driving the future of AI infrastructure.

Redefining AI Infrastructure.

Enter the Blackwell Era.

Scaling Enterprise AI Models

Shorten time-to-market for foundation models and domain-specific LLMs. NVIDIA GB200 NVL72 accelerates training and fine-tuning, helping businesses deploy competitive AI products faster.

Powering AI Agents at Scale

Support enterprise-wide AI copilots and assistants with real-time inference and long-context reasoning. This enables employees and customers to access intelligent support instantly and reliably.

Driving Multimodal Applications

Run advanced applications that combine text, images, video, and audio. NVIDIA GB200 NVL72 provides the performance and bandwidth required for smarter search, compliance automation, and richer customer experiences.

Accelerating Data-Intensive Decisions

Process massive datasets in near real time for forecasting, risk analysis, and scenario planning. Enterprises can make faster, more confident decisions powered by AI-driven insights.

Use Cases

Finance and Banking

Accelerate risk modeling, fraud detection, and algorithmic trading with ultra-low latency and high throughput for faster, more accurate decisions.

Healthcare and Life Sciences

Harness terabyte-scale memory and FP64 performance to speed drug discovery, genomic analysis, and medical imaging, reducing research cycles and improving outcomes.

Manufacturing and Industrial Engineering

Leverage liquid-cooled, energy-efficient clusters to power digital twins, predictive maintenance, and generative design that enhance productivity and cut downtime.

Retail and Customer Experience

Deploy large-scale AI copilots, recommendation engines, and personalization systems that deliver real-time insights and seamless customer engagement.

NVIDIA GB200 NVL72 Technical Specs

NVIDIA GB200 NVL72

NVIDIA GB200 NVL72 Grace Blackwell Superchip

Frequently Asked Questions

Power Your AI with NVIDIA GB200 NVL72

Get unmatched performance and scale

Turn AI performance into real impact today

Certified and trusted by industry standards

(ISO/IEC 27001:2022 and SOC 2 Type I & Type II)
